Last Updated on 08/03/2025 by Grant Little

What is a Turing PI 2?

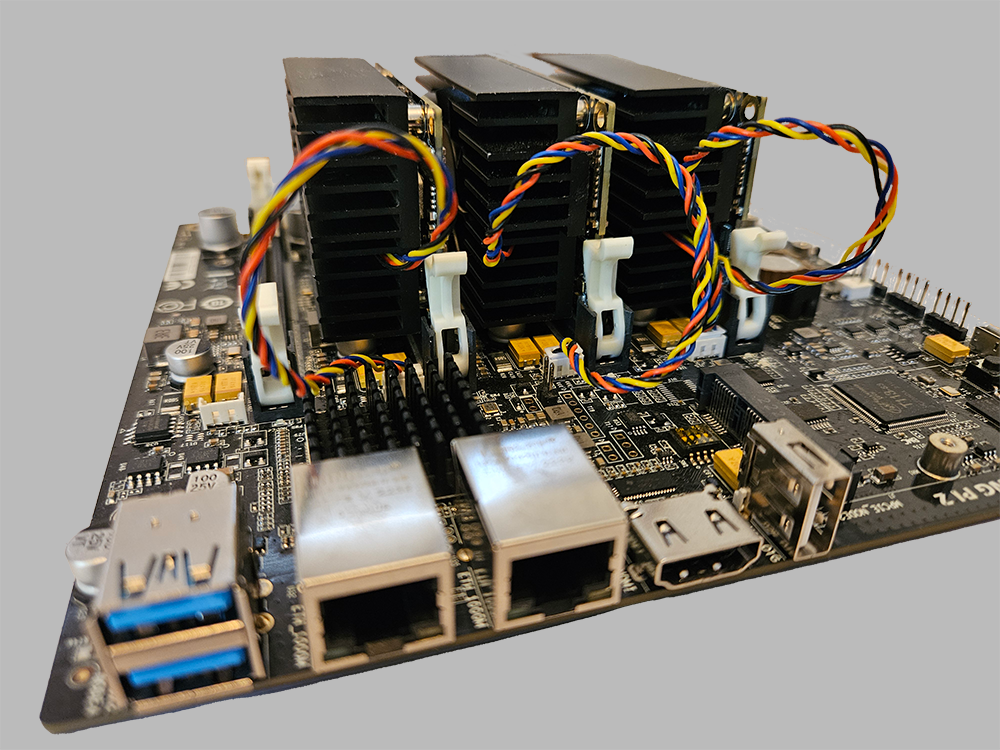

The Turing PI 2 is a compact, modular computing cluster board designed to host up to four compute modules, such as the Raspberry Pi Compute Module 4 (CM4), the Rockchip RK3588-based Turing RK1, or NVIDIA Jetson modules. It serves as a cost-effective, power-efficient, and highly scalable solution for those interested in distributed computing, edge computing, and self-hosted infrastructure.

Unlike traditional single-board computers (SBCs) that operate as standalone units, the Turing PI 2 integrates multiple compute modules into a single system, allowing users to create micro-clusters for development, learning, or small-scale production use cases.

It features a mini-ITX form factor, onboard networking, and expansion options that make it ideal for a variety of applications.

Why Choose Turing PI 2 for a Home Lab?

The home lab community is always on the lookout for powerful yet energy-efficient solutions for running self-hosted applications, experimenting with distributed systems, and learning advanced IT concepts. The Turing PI 2 offers a compelling alternative to traditional x86-based server hardware by providing:

- Lower Power Consumption – Compared to traditional servers, a Turing PI 2 cluster consumes significantly less power, making it ideal for 24/7 operation.

- Modularity & Scalability – Users can start with just a single CM4 or RK1 module and expand over time.

- Compact Size – The mini-ITX form factor allows for easy deployment in small spaces, including home offices and labs.

- Cost-Effectiveness – When compared to purchasing multiple single-board computers and networking them separately, the Turing PI 2 provides an integrated, lower-cost solution.

- Silent and Heat-Efficient – Unlike traditional rack-mounted servers that generate significant heat and noise, the Turing PI 2 runs cool and quietly, making it a great addition to any home environment.

- Versatile Deployment – It supports various workloads, from running lightweight services to more complex containerized applications, making it a flexible choice for different home lab setups.

Advantages of the Turing PI 2

- Energy Efficiency – With ARM-based compute modules, the cluster consumes a fraction of the power required by traditional x86-based servers.

- Centralized Management – The board provides unified power, networking, and management interfaces, simplifying cluster setup.

- Ideal for Learning & Development – Perfect for those interested in Kubernetes, Docker, and other distributed systems without investing in expensive hardware.

- Supports Multiple Compute Modules – With both CM4 and RK1 module compatibility, users have flexibility in choosing compute power and storage configurations.

- Quiet Operation – Unlike traditional server racks with noisy fans, the Turing PI 2 runs passively or with minimal cooling requirements.

- Expandable Networking – While the onboard networking is sufficient for basic use cases, advanced users can connect additional USB-to-Ethernet adapters for more robust networking setups.

- Flexible Storage Options – While each CM4 or RK1 module has limited onboard storage, support for M.2, microSD, eMMC, SATA, and USB storage lets users build larger storage capacities for their applications.

- Great for Edge Computing – With its ability to run AI/ML workloads using Jetson modules, the Turing PI 2 can serve as a small-scale edge computing device.

- Optimized for ARM-Based Software – With a growing ecosystem of ARM-based software, including Linux distributions, databases, and cloud-native applications, the Turing PI 2 provides a robust platform for developers and enthusiasts.

Disadvantages of the Turing PI 2

- ARM Compatibility Issues – Some applications may not have ARM-native builds, requiring workarounds such as emulation.

- Limited Compute Power – Compared to x86-based systems, CM4 and RK1 modules are less powerful, making them unsuitable for heavy workloads.

- Networking Limitations – While the board has an onboard switch, high-bandwidth applications may require additional networking solutions.

- Storage Constraints – CM4 modules have limited onboard storage, and while external drives can be attached, performance may be limited.

- Limited Memory Capacity – The maximum RAM available per module is constrained compared to modern x86 systems, which could be a bottleneck for memory-intensive applications.

- Software Ecosystem Challenges – While ARM software support has improved, some enterprise software, games, or proprietary applications may not have ARM-native versions.

- Lack of Native GPU Support – While RK1 modules provide sufficient compute power for many tasks, they lack dedicated GPU acceleration unless a Jetson module is used.

- Power Supply Considerations – A high-quality power supply is needed to ensure stable performance, particularly if running multiple high-performance modules.

Example Use Cases

The Turing PI 2 can be used for a variety of clustered computing applications in a home lab, including:

1. Running Docker Swarm

Docker Swarm allows you to run and orchestrate containerized applications across multiple nodes. A Turing PI 2 cluster is an excellent environment for:

- Self-hosting applications like Nextcloud, Home Assistant, or media servers.

- Testing and deploying microservices.

- Learning container orchestration concepts.

- Running monitoring tools like Prometheus and Grafana.

2. Running Kubernetes

For users interested in Kubernetes, the Turing PI 2 provides an affordable platform to experiment with cluster management, scaling, and cloud-native applications. A Kubernetes cluster on the Turing PI 2 can support:

- Self-hosted GitOps pipelines.

- AI/ML workloads using ARM-compatible frameworks.

- Distributed data stores like etcd, or replicated PostgreSQL.

- Serverless computing frameworks like OpenFaaS or Apache OpenWhisk.

- IoT device management through Kubernetes-based edge computing.

3. AI and Machine Learning with Jetson Modules

One of the standout capabilities of the Turing PI 2 is its support for NVIDIA Jetson modules, which bring AI and machine learning capabilities to the cluster. A potential configuration includes using three RK1 modules for general-purpose computing while dedicating one Jetson module for AI workloads. This setup enables:

- Running LLMs Locally – With a Jetson module installed, you can deploy Ollama to experiment with running large language models (LLMs) locally on the Jetson’s GPU.

- Edge AI Applications – Run computer vision models using TensorRT and deploy applications that process video streams in real-time.

- AI-Accelerated Kubernetes Workloads – Use Kubernetes to schedule machine learning tasks onto the Jetson node while offloading general application tasks to the RK1 nodes.

- Federated Learning & Distributed Training – Combine the Jetson’s GPU with RK1 compute nodes to build decentralized AI training pipelines.

- Speech & Image Recognition – Deploy AI models for automated speech recognition (ASR) and object detection using Jetson’s onboard GPU acceleration.

- Robotics & Automation – Use Jetson modules to control robotic systems, drones, and autonomous navigation applications.
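As a rough illustration of the "AI-Accelerated Kubernetes Workloads" idea above, here is a minimal sketch that builds a Pod manifest pinned to the Jetson node with a standard Kubernetes `nodeSelector`. The label `accelerator=jetson`, the pod name, and the image are all hypothetical placeholders, and the sketch assumes the Jetson node has already been labeled. It does not cover exposing the GPU to the container, which depends on how the NVIDIA container runtime is set up on the node.

```python
import json


def jetson_pod_manifest(name: str, image: str, node_label: dict) -> dict:
    """Build a Pod manifest that only schedules onto nodes carrying node_label."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # nodeSelector restricts scheduling to nodes whose labels match.
            "nodeSelector": dict(node_label),
            "containers": [{"name": name, "image": image}],
            "restartPolicy": "Never",
        },
    }


if __name__ == "__main__":
    manifest = jetson_pod_manifest(
        name="ml-task",                         # hypothetical pod name
        image="example.local/ml-task:latest",   # hypothetical image
        node_label={"accelerator": "jetson"},   # hypothetical node label
    )
    # kubectl accepts JSON as well as YAML manifests.
    print(json.dumps(manifest, indent=2))
```

Assuming the node was labeled with something like `kubectl label nodes <node-name> accelerator=jetson`, the output could be piped into `kubectl apply -f -`. Pods without the selector remain free to land on the RK1 nodes.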

My Use Cases

I currently have three RK1 Compute Modules installed. Specifically, I wanted three nodes to allow testing of distributed applications that require quorum.
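To make the quorum point concrete, here is a minimal sketch, assuming majority-based consensus of the kind used by Raft-style systems such as etcd and Docker Swarm managers. The function names are mine, purely for illustration.

```python
def quorum_size(nodes: int) -> int:
    """Smallest majority of a cluster with `nodes` voting members."""
    if nodes < 1:
        raise ValueError("a cluster needs at least one node")
    return nodes // 2 + 1


def tolerated_failures(nodes: int) -> int:
    """How many members can fail while a majority is still reachable."""
    return nodes - quorum_size(nodes)


if __name__ == "__main__":
    for n in range(1, 6):
        print(
            f"{n} node(s): quorum={quorum_size(n)}, "
            f"tolerated failures={tolerated_failures(n)}"
        )
```

With three nodes the quorum is two, so the cluster survives the loss of one node. Two nodes tolerate no failures at all, and a fourth node adds no extra tolerance over three, which is why three is the smallest useful size for this kind of testing.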

I run a three-node Kubernetes cluster on these devices.

I also run Docker and Docker Swarm across all three nodes. Honestly, I find I use Docker Swarm more than Kubernetes because of its simplicity, unless there is a Helm chart already available for the applications I want to use.

This setup allows me to quickly deploy various applications and experiment with them to gain a better understanding. Importantly, it also allows me to easily destroy those application environments and try something new or different without much hassle.

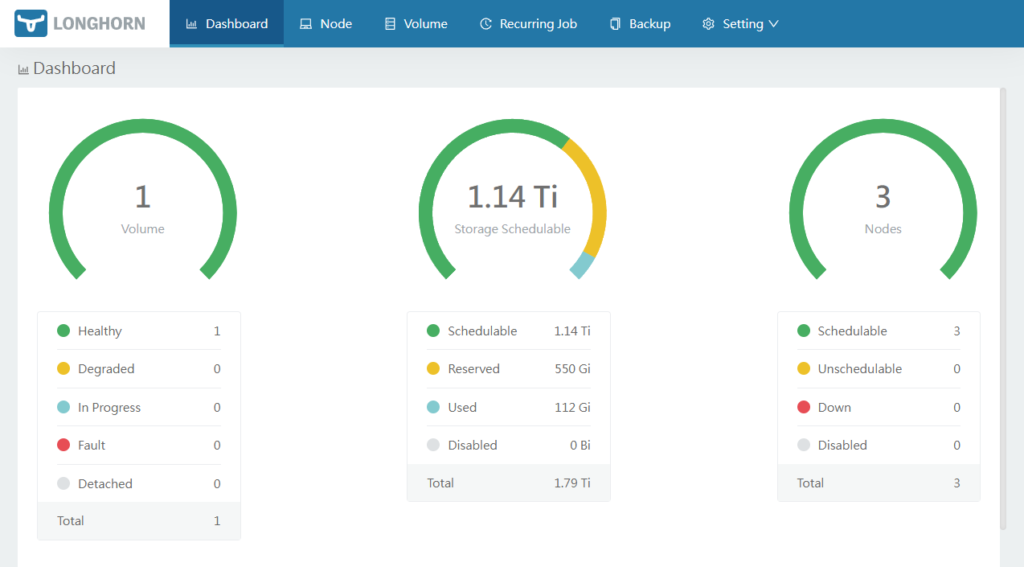

The documentation provides reasonably good procedures for installing applications like Kubernetes and Docker Swarm. It covers, for example, installing Longhorn for storage and Argo CD.

However, I have taken it a step further and created Ansible playbooks that initialize the RK1 compute modules and install all relevant software. This automation enables me to effortlessly recreate my test lab whenever needed.

I’m hoping to add an NVIDIA Jetson module to test and integrate some ML and LLM capabilities into applications.

Conclusion

The Turing PI 2 is a game-changer for home lab enthusiasts who want a low-power, modular, and scalable system for experimenting with cluster computing.

While it has some limitations compared to traditional x86-based setups, its affordability, efficiency, and flexibility make it an attractive choice. The ability to integrate Jetson modules for AI workloads further extends its capabilities, making it a powerful choice for both self-hosted applications and machine learning experimentation. If you’re looking to build a versatile cluster at home, the Turing PI 2 is an excellent starting point.